Enhanced AI: Artificial Learning and Thinking

AI Chat Bot

A chatterbot is usually written with XML-based toolkits and architectures that comprise the dialog system and the framework used to develop it. Responses to common questions are stored in its database, so when a human asks something common, the bot identifies the keyword and replies with an answer, usually chosen at random from a set of similar statements.
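The keyword lookup and random choice described above can be illustrated with a minimal sketch. It uses a plain Python dictionary in place of an XML toolkit, and the names `RESPONSES`, `FALLBACK` and `reply`, along with the sample replies, are hypothetical and exist only for illustration.

```python
import random

# Hypothetical response database: each keyword maps to a few similar replies.
RESPONSES = {
    "hello": ["Hi there!", "Hello!", "Hey, nice to meet you."],
    "weather": ["I hear it is sunny today.", "I cannot look outside, sadly."],
    "name": ["I am a simple chatterbot.", "You can call me Bot."],
}

# Used when no known keyword appears in the message (an assumption of this sketch).
FALLBACK = ["Tell me more.", "I am not sure I understand."]


def reply(message, rng=random):
    """Return a canned reply for the first known keyword found in the message."""
    # Normalise each word: drop surrounding punctuation and lowercase it.
    words = [w.strip(".,!?").lower() for w in message.split()]
    for word in words:
        if word in RESPONSES:
            # Pick one of the similar statements at random.
            return rng.choice(RESPONSES[word])
    return rng.choice(FALLBACK)


if __name__ == "__main__":
    print(reply("Hello, bot!"))
    print(reply("What is the weather like?"))
```

A bot like this never produces a sentence that is not already in its database, which is why it comes across as a mimic rather than a thinker.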

The real mark of an artificially intelligent system is that it interacts with real people with all the usual sarcasm and spark of a real human. It should also sustain a conversation at the level of human-to-human talk, so that it could easily pass a Turing test. What does it take to turn a bot into something far better than a mere mimic?

The Reality of AI Assistants

In reality, no counterpart of JARVIS or HAL is capable of thinking on its own. It has been a challenge in the field of artificial intelligence to build a versatile AI assistant that can learn by itself from conversations and reply with a statement that the system itself generates; in layman's terms, "making a computer program improvise".

To improvise, any system needs some basic knowledge to start with, or rather a set of methods that tell the system how to learn from real-world interactions. DeeChee, a humanoid robot capable of learning language naturally from conversations, is a good example of artificial learning. Similarly, building a method that captures human conversation patterns and adapts to them will be a great challenge in enhancing these systems. It would let bots process and think on their own as they learn the patterns and analyse the statements.

Primitive AI Sparks

A futuristic AI like JARVIS (well… the list should really start with HAL, R2D2 and C3PO) would need a complex algorithm capable of understanding natural human speech, isolating it from noise, processing it against a pattern database and replying with a whole new statement created specifically for that conversation. The voice recognition systems that we use now are extremely primitive. Siri, Dragon NaturallySpeaking and Nina from Nuance show promise, as Nuance's cloud database holds the conversational data that the software needs to learn.

Forecast

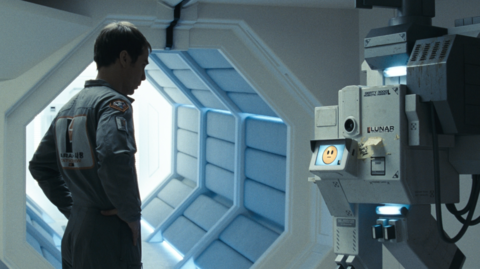

Beyond current expectations, the system should understand a person's emotional moods with the aid of visual recognition technology and tonal mapping of the voice. This was shown in the movie 'Moon' (2009) in the form of GERTY, an adorable robot that understands the lonely astronaut's emotional state and can react and reply emotionally, with an emoticon displayed on its screen.

A whole new form of versatile AI assistant that can think and talk by itself, without any dialogue database, is still only a concept. When technologies like natural learning, cloud voice databases such as Nuance's and language mapping become more effective, they could pave the way for better artificial assistance. Breaking through the constraints of current technology would take at least a decade, just to prepare the platform for such a complex innovation and the colossal challenges it involves.

This post was first published on September 10, 2012.